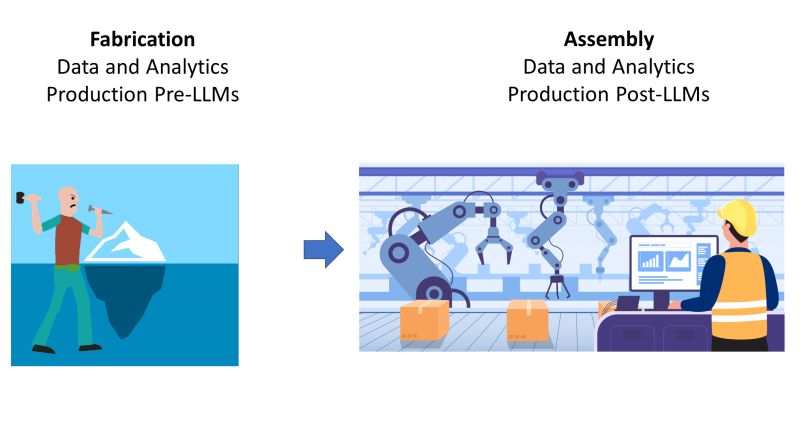

We are about to enter the assembly age in data and analytics. The era of "fabricating" everything from scratch, including pipelines, platforms, and catalogs, is coming to an end. What does this mean for the modern data ecosystem and data products?

A Seismic Shift

Like Pompeii, data and analytics (D&A) teams, and indeed all tech teams, are about to experience a seismic shift in how data, analytics, and technology in general are produced. This change has been a long time coming. Many companies have been in fabrication mode: engaged in a lot of bespoke, non-business-facing work, such as modeling, pipelines, and data sets, still built largely from scratch. Modern tools and technologies, like the cloud, have made fabrication easier, but a lot of low-level bespoke work is still being done.

The Rise of Automation

Firms need skilled fabricators (data scientists, data engineers, and so on), and all of them are competing for the best talent. There has been much talk about data being the new oil or some other valuable commodity, but this analogy misses the fact that data is not standardized into interchangeable components. Two companies' data sets are still largely different, even when they represent the same business processes.

Large language models (LLMs) and generative AI are about to change that. What they componentize is not the data but the solution. For example, an LLM can be asked to create a sales forecast based on sales numbers, and it will generate the forecast model directly from the data. The actual commodity is the automatic generation of a common analytics model, along with the ability to automatically introspect the data.

Streamlining the Process

For most organizations, only a few analytical model types drive 80% of core decision-making. Yet many are still stuck in the fabrication process, spending millions on building data platforms and struggling to hire good fabricators. The situation resembles the transition from traditional scribes, who took years to create a book, to the printing press, which could produce similar outputs in a fraction of the time.

What does this assembly line look like?

- Hypothesis: Formulate a hypothesis about the business problem.

- Value Assessment: Evaluate the potential value of addressing the problem.

- Prioritization: Prioritize the hypothesis based on value and feasibility.

- Prototype: Use LLMs to define the model and utilize the data in minutes, not weeks or months.

- Market/Customer Testing: Test the prototype with real market or customer data.

- Measurement: Use LLMs to quickly suggest and create the necessary metrics.

- Production: Move to production in a much shorter timescale.

- Monitoring: Continuously monitor the results.

- Iteration: Quickly and easily revisit any stage as needed because the tech and data production are accelerated by LLMs.
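
One way to picture the stages above is as a small ordered workflow in which any earlier stage can be revisited. The following is only a minimal sketch: the `AssemblyLine` class, the stage identifiers, and the `advance` and `revisit` methods are hypothetical names chosen for illustration, not part of any tool mentioned here.

```python
from dataclasses import dataclass, field
from typing import List

# Stage names mirror the list above; the identifiers are illustrative.
STAGES = [
    "hypothesis",
    "value_assessment",
    "prioritization",
    "prototype",
    "market_testing",
    "measurement",
    "production",
    "monitoring",
]


@dataclass
class AssemblyLine:
    """Tracks where a data product sits in the assembly line."""

    position: int = 0
    history: List[str] = field(default_factory=lambda: [STAGES[0]])

    @property
    def stage(self) -> str:
        return STAGES[self.position]

    def advance(self) -> str:
        """Move to the next stage; stay put once monitoring is reached."""
        if self.position < len(STAGES) - 1:
            self.position += 1
            self.history.append(self.stage)
        return self.stage

    def revisit(self, stage: str) -> str:
        """Iteration step: jump back (or forward) to any named stage."""
        self.position = STAGES.index(stage)  # ValueError if stage is unknown
        self.history.append(self.stage)
        return self.stage
```

The `history` list records every stage visited, so a team could see how often a product looped back to, say, the hypothesis stage before reaching production.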

The Future of Data Products

For the modern data ecosystem and data products, this shift is a positive development. Teams will be able to run a tight, fast OODA (observe, orient, decide, act) loop to create, test, and productionize genuine data products driven by business use cases. There are still significant questions about the transparency and robustness of LLMs that need to be addressed, but this change is happening now, and we need to embrace it.

The Human Factor

While LLMs open up a level of democratization in analytics by componentizing solutions, there remains a critical human factor. Business leaders need to identify and frame problems effectively, which can still be challenging. LLMs can assist by providing information on well-known business scenarios, but businesses must create their hypotheses either independently or with subject matter expert (SME) guidance.

The acceleration provided by LLMs should streamline the process of prototyping, testing, measuring, and iterating without large budgets or programs. This approach also allows for quick diagnosis of business pain points, driving the creation of hypotheses for remediation.

The Economic Impact

The economic effects on the data science profession could be significant. LLMs are expected to alter pricing and compensation for many kinds of human labor, including data science. The impact could be comparable to that of the Industrial Revolution, when the nature of work and business operations underwent a dramatic transformation.

Practical Applications

To leverage LLMs effectively, businesses need to break problems down into steps and feed each step to the LLM as a prompt, assuming the model has been trained on a corpus that covers the specific business context. For example, a bank calculating a simple credit score could use prompt engineering to create the necessary models and API calls efficiently.
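
Breaking a problem into steps and feeding each one as a prompt might look roughly like the sketch below. Everything in it is an assumption made for illustration: the step wording in `STEPS`, the `run_prompt_chain` helper, and the `echo_llm` stand-in, which takes the place of a real model call so that the example runs without any particular vendor's API.

```python
from typing import Callable, List

# Hypothetical step prompts for the credit-score example. "{previous}" marks
# where the answer from the preceding step is substituted in.
STEPS = [
    "List the input fields needed for a simple credit score.",
    "Given these fields:\n{previous}\nPropose a scoring formula.",
    "Given this formula:\n{previous}\nDescribe an API endpoint that serves the score.",
]


def run_prompt_chain(steps: List[str], llm: Callable[[str], str]) -> List[str]:
    """Send each step to `llm`, passing the previous answer into the next prompt."""
    outputs: List[str] = []
    previous = ""
    for template in steps:
        # str.replace is used instead of str.format so that braces inside
        # a model answer cannot break the substitution.
        prompt = template.replace("{previous}", previous)
        answer = llm(prompt)
        outputs.append(answer)
        previous = answer
    return outputs


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call: echoes the first line of the prompt."""
    return "ANSWER[" + prompt.splitlines()[0] + "]"


if __name__ == "__main__":
    for i, answer in enumerate(run_prompt_chain(STEPS, echo_llm), start=1):
        print(f"step {i}: {answer}")
```

Passing the model in as a plain callable keeps the decomposition logic separate from whichever LLM is used, and lets a human or SME review the intermediate answers between steps.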

Moving Forward

As organizations transition to this new paradigm, they need to ensure transparency and robustness in their use of LLMs. The shift towards assembly line processes in data and analytics represents a major evolution, promising faster, cheaper, and more reliable business insights.

The challenge lies in integrating these advanced capabilities into existing business processes and ensuring that the human elements of decision-making and problem-solving are not lost in the automation. By focusing on continuous improvement and leveraging the latest technologies, businesses can stay ahead in the rapidly changing landscape of data and analytics.