There are lot of enterprise Chat Bot solutions that are in the market today. Vendors typically include the tech giants, professional service companies and traditional software vendors.

* There are many terms to describe this type of solution e.g. Cognitive/Virtual Assistants, dialogue system. But for this article I will use the term Conversational Agent or Agent for short.

This post attempts to cover some of the aspects of what makes up an Enterprise class Conversational Agent i.e. a solution that runs as part of a company's day-to-day processing.

It doesn't try to be an exhaustive critique of the natural language processing, machine learning or artificial intelligence domains (as you would be reading this for months) but it tries to demystify some of the more common aspects.

A Conversational Agent can be described as, a computer program designed to simulate conversation with human users using artificial intelligence. Currently the Enterprise market has largely goal and question-and-answer orientated has solutions, rather than products that try to pass the Turing test (the test of a machine's ability to exhibit intelligent behaviour equivalent to, or indistinguishable from, that of a human)

Typical use-cases include:

1. Call centre service resolution agent (to handle operational and administration queries e.g. what has happened to my payment etc...)

• The business cases here would be to reduce headcount or scale existing resources in the call centre by fielding a percentage of calls with the Conversational Agent solution.

2. Client self-service ofoperational process, whereby the Conversational Agent is helping a client navigate a process with a company (e.g. helping to on-board a client in order to transact)

• The business case here is typically involves speeding up the processing time or reducing the cost of the process by allowing the Conversational Agent to perform the task instead of a human and/or in a faster way.

3. Fielding queries on a companies information (products, processes and client information) by a client or a relationship manager on behalf of a client (e.g. How much is the rate on particular type of loan for this client in this jurisdiction)

• This can have the same business impact as the first use-case i.e. reduction of head count or scaling up service with existing head count but with the added value of making a lot of the companies data available in a much more user friendly way.

Problem Space

There are, of course many other use-cases than the ones detailed here (new ones appearing all the time) but the problem space they are try to solve into tends to be in the following areas:

• Process flow - Following a prescribed process whereby the bot is taking the user through a sequence of well-known steps in order to achieve a specific outcome.

• Generalised Information retrieval - whereby the Conversational Agent is searching, compiling and presenting answers to questions from a large corpus of data from a number structured and unstructured sources e.g. documents, intranets, web sites, databases etc...

(The reality is the two problem spaces are actually a continuum that form a scale of sophistication, whereby Conversational Agent solutions are positioned at different positions along this line)

Functional Break down

In order to accept user questions and converse, the Conversational Agent needs to do a number of activities. Given both problem spaces, the initial steps in tend to be common.

Semantic Parsing

The first step involves natural language processing of user interaction - for most solutions this is an instant messenger style interface although there are vendors that layer avatars and speech to-text components on top to give a more natural and human user experience. It's interesting to note that there have been cases where the user experience has produced unexpected side effects.

This step has the job of capturing, parsing, breaking down the text input from the user into semantic parts i.e. pieces of data that the Conversational Agent can use to query its knowledge base. This typically involves the use of natural language processing techniques that include the following:

1- Tokenisation and sentence detection - breaking the text in to words, phrases and sentences

2- Stemming and Lemmatisation - removing inflectional endings only and to return the base or dictionary form of a word e.g. both "pulling" and "pulled" to "pull" and both "Went" and "going" to go. This is used to match with terms in the knowledge base and is much easier to match similar meaning words rather than having many synonyms.

3- Part-of-speech tagging and/or semantic text analysis - where by the text is turned into effectively a query structure

e.g. "How much is the rate on structured loan in Switzerland" would be parsed as:

1. "How" is a quantity operator,

2. "rate" is the property of the subject

3. "structured loan" is the subject to be queried

4. "Switzerland" is a qualifier i.e. filter criteria to be applied

4 - Entity identification - This where things get really interesting. Once the main subject of the query has been ascertained then next step is to match it to something the Conversational Agent knows about. The key algorithms used to perform this typically fall into one or more of the following:

1- Intent detection and classification - this is the process of categorising the subject and qualifiers into known buckets (classes) of words e.g. the user wants to know about various types of payments.

2- Sentiment analysis - is where the users query is tested to see if its positive, negative or neutral

3- Named-entity recognition - extraction of proper nouns (e.g. names of people, places etc...). The methods applied here are usually based on linguistic grammar-based or machine learning models.

(This isn't an exhaustive list as there are a number of other aspects that can be extracted)

Machine learning algorithms tend to be used extensively here and can range from simple techniques like bag-of-words and Naive Bayes to more complex approaches like and deep learning (e.g. neural networks)

Keeping Track

The next component is the dialogue engine. This is the heart of the solution and keeps track of the conversations and its context and state.

Here is an example.

I'm having a conversation about payments information and I ask an unrelated question like "what is my current balance on my current account".

Once the Agent has answered, I then return to the payments line of questioning

The Conversational Agent should be able proceed as if nothing is happened and can carry on answering subsequent questions around payments in the conversation without having to start again.

The techniques employed here typically use graph based data structures to store the current state of the conversations in play i.e. each question answer in the conversation flow and context information.

Context data typically includes things like which domain is the conversation in (e.g. payments) and other meta-data that has been captured as part of the interaction, like the users name, account details etc...

Providing the Right Answer

Now that we have our query structure, we need to use this to probe our knowledge base to construct a response. In practice this step is integrated to the previous steps but I have separated it for clarity.

How this is achieved depends on the class of problem that the Conversational Agent is trying to solve.

Process Flow Use-Cases

For process flow type use-cases, Conversational Agent solutions typically query a graph of terms that have been constructed in prescriptive manner as linear sequence.

For the customer on-boarding use case a flow structure would be created by person using visual graphical interface that would describe the data to be captured in sequence e.g. capture the customer name, followed by the address, bank statements, etc....

Some solutions use advanced techniques to assist this process which typically include the following:

a) Having a historical store of questions and conversations

b) Including advanced intent detection using training sets.

One effect of this approach can be semantic precision is sacrificed as questions are forced into rigid form that mean Chabot is confounded if a sufficiently ambiguous question is asked.

Another important piece of functionality, that a lot of solutions include, are steps in the flows can call out to APIs rather than just contain data e.g. "what is the weather in London" could call out to a weather API to deliver the answer "29% C" (yes, I know 29%C in London is wishful thinking).

This works really well when there is a well-defined (and fairly simple process) that can easily be followed by a state-machine, but can be very challenging if this approach is applied to the general information retrieval problem space.

Generalised Information retrieval

For the problem space of generalised information retrieval, a different set of techniques (as alluded to previously) are required.

Why is this so different?

Imagine you have corpus of millions of instances of documents that all contain rich textual data that, in turn, have many terms that are linked between documents.

This problem is challenging in a number of ways:

Firstly; if you were to build a flow structure (as in the process flow approach) then one would spend vast amounts of time extracting all the terms and joining them together in all the varied meaningful ways in order to be able to answer many types of disparate questions (even with machine learning assistance this would be time consuming)

Secondly; if the question is semantically ambiguous or veers far from the flow structure then the Conversational Agent would struggle to match the question to one of the flows.

Thirdly; actually, extracting meaningful text from complex sources, such as tables in documents, in automated way is actually very difficult (who knew? But will explain later). So, this would mean a human would have to invest large amounts of time to define and refine. This fine if one has a small set of simple tables but, it breaks down if the set grows large or the tables are constantly changing or are very large and/or complex.

So how is it typically done?

Ingest the Data

The first step is to connect to the data sources to ingest and semantically parse the corpus of data in an automated way. This is a classic I.T. system integration problem and most Agents don't include comprehensive enterprise integration frameworks (that typically include many connectors for different applications and technologies). One has to write a custom connector that task to something like a web service. A notable exception is the Micro Focus IDOL platform which can connect to many types of sources with its connector framework.

This data typically comes in the form of unstructured digital documents e.g. PDF document files, Web Sites, news feeds, Microsoft Office docs (including excel), emails as well as social media and voice, image and video data. For these latter types, speech to text and image processing components need to be included (again machine learning techniques, like neural networks can shine here).

The more sophisticated Agent solutions can also include the ingestion of structured (e.g. database result sets) and semi structured (e.g. JSON, XML etc...) which is a very useful ability that allows much more traditional data of the Enterprise to be included.

Build the Knowledge Graph

Now, once we have connected the sources we need to extract and classify the data in a generalised way so that the Conversational Agent can be asked a wide set of questions with high levels of ambiguity.

We will still need to start with some structure to form the basis of the classification the knowledge base so it can be linked to the intent of the conversations. At this time, in the industry, there still needs human input to achieve this. Automatically creating a computer readable structure from documentation is extremely difficult for deep domain use-cases (i.e. complex hierarchies of highly contextual linked terms and phrases).

Solutions in this space build what is known as a knowledge graph. This is a structure that stores all the facts in a graph with semantic links between them that represent the domain that the Conversational Agent solution can answer questions about.

Approaches, tend to employ the use of a taxonomy or an ontology to form the basis of the graph. These are simply key terms, phrases and other contextual data that is structured with linkages between them. E.g. Payment links to BACS Payment that links to BACS payment processes. They are guides to allow the Conversational Agent to understand which domain the user is asking about and what can information can be returned.

Fact Extraction

Once you have a taxonomy defined you will need to train the system link the information in the corpus to it. To do this firstly;

Facts, terms, phrases and names, numbers, together with associated information (e.g. loan data with interest rates) need to be extracted from the raw text in the documents

This include extracting facts from tables as well as the body of the text.

This turns out to be quite tricky do perform in a generalised way (i.e. to be able to automate the process as much as possible for many different table structures).

Firstly the table has to be recognised as a table. Sounds easy but in some document formats (e.g. PDF) the identification process sometimes has to detect the lines around the table. Obviously for marked up formats like HTML this isn't required. But this is going into the OCR (optical character recognition) world and which is a whole discipline in its own right.

Now let's assume you have managed to identify the raw table structure the next is to extract the facts out.

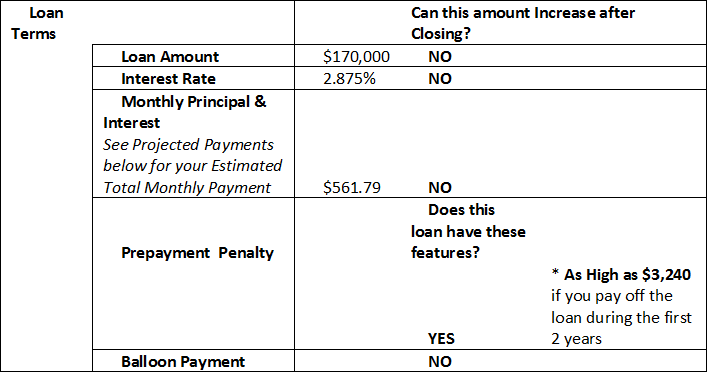

Take the following table:

You can see there are multiple facts buried in individual cells and under headings in the same cell. Now it's possible to create a simple rules to extract facts and link them to each other for this particular table but to create a general solution requires a range of many different techniques sometimes used together.

Knowledge Engineering

Next, once you have created facts you need to train the intent detection from a training set (derived from the corpus) be able to classify the user questions and be able to match them to facts. Typical methods for intent detection are Naive Bayes (NB) and Support Vector Machines (SVM) or, if you have a very large and varied training set, a deep learning approach can be employed.

This task is still not to be underestimated and usually an iterative process comprised of; ingesting the data, indexing (i.e. building the fact base), training the intent and tuning the taxonomy/ontology. This is all done whist interrogating the Conversational Agent until the responses are tuned to give the best desired outcome. This is a major part of any solution and really impacts the quality of the whole solution. I.e. the more time spent here the typically better the interaction and the faster the route to the desired response for each conversation.

A really good technique that is typically employed is to store and reuse the actual conversations to improve the hit rate for questions. This is achieved by a) storing new facts in the taxonomy user input and b) for the intent training, to improve the classification process.

Getting a Human Answer

Once the intent is trained then the next step is to bring back the information in a human friendly form. One could have the answer to "what is the current temperature" as simply "29ºC" but that is not providing a great user experience. A better answer would be "The current temperature in London is 29 degrees Celsius" to give a more human response.

This requires a natural language generation feature whereby the Conversational Agent turns structured fact data into written narrative, writing like a human.

This is where natural language generation comes to play. A simplistic approach would be to use templating i.e. have hard coded sentences that have place holders e.g. "the current temperature in %1 is %2 degrees Celsius" where %1 and %2 are simply replaced with the facts "London" and "29".

A more advanced method to be employed is a trainable sentence planner as described in this paper [2004, Walker et al] that typically follows the steps, below:

1. Content determination - what you're going to say, based on the facts of the answer and user's expectations

2. Sentence planning - how are you going to say it.

3. Surface realisation - the final output, using specific words, making sure sentences follow each other nicely and so on. This is the style or flow.

This can break down into smaller stages (as shown here), but it's essentially the same approach.

The other aspect to be considered is if the answer brings back multiple disparate facts these need to be strung together as meaning full sentences. I.e. if the intent matches multiple facts then the Conversational Agent needs to create natural language response that makes sense. E.g. "What is my market exposure to Greece" which could yield, "You currently have 100 Million Euros of Greek government bonds, and 50 Million Euros of stock in Aegean Airlines". As you can see the response facts are wrapped in English language that was created by the CAs natural language generation component.

If a direct fact cannot be found then another technique is to use passage extraction which tries to highlight the most relevant fragments of text in paragraphs of the corpus.

This is known as passage extraction. The goal here is to return a passage of text that contains syntactically looks like a possible answer to the question so that it is likely to be answered indirectly.

This is generally more associated with search and document indexing solutions but, if combined with the Conversational Agent system can be a powerful aid to solving the information retrieval problem. It can also be enhanced with a linkage from the knowledge graph to use the documents that have been ingested to create the facts. To do this means that the Conversational Agent solution really needs to contain a document/content management functionality. Most Conversational Agent solutions on the market today don't include this (except the Micro Focus IDOL platform), but is a really important component, and one that is often overlooked.

Summary

As you can see there a number of components required in order to create an industrial Conversational Agent. One thing I would say it's really important not to underestimate the journey to producing an enterprise class experience.

Once you have decided to enter into the Conversational Agent world (although you may already have started) it's critical that you set some simple clear objectives at your first outing and have really good people who can build the knowledge base. This includes really good SMEs and resources who understand non-traditional I.T. methods such as NLP and machine learning.

These types of solutions are rapidly becoming a key component of a company's data and I.T. infrastructure and there have been many cases of programs not succeeding due to the space not being understood.

I hope this has given you some insight into the space and I would really like to hear your comments around your journey building these types of solutions.